Seeing the future digitally: Computer vision in trauma and orthopaedics

Authors: Ali Ridha and Aditya Vijay


Ali Ridha is an orthopaedic research fellow at the South West London Elective Orthopaedic Centre (SWLEOC), where he works on projects applying artificial intelligence to orthopaedics. He is also an honorary research fellow at the University of Warwick.

Aditya Vijay is an orthopaedic research fellow at the South West London Elective Orthopaedic Centre (SWLEOC), where he works on AI projects in orthopaedics, focussing mainly on predictive analytics and computer vision.

Artificial intelligence (AI) has significantly advanced since its theoretical roots in the 1940s, evolving from simple shape and pattern recognition to handling complex visual and language tasks1,2.

The development of deep learning, especially following a landmark achievement in the 2012 ImageNet competition, propelled AI applications into realms previously dominated by human cognition, such as image recognition and analysis3. This breakthrough fostered the growth of computer vision (CV), a branch of AI that enables machines to interpret and analyse visual data in ways that can support or even exceed human capabilities in specific tasks.

In trauma and orthopaedics, CV is showing promise, but it remains relatively new. Tools like BoneView™ are helping clinicians by identifying fractures on X-rays, while technologies like VERASENSE™ assist in real-time alignment of implants during surgery4. Although these tools highlight what’s possible, CV’s routine use in orthopaedic practice is only just starting to take hold5,6. Interest in CV is growing, fuelled by an ever-increasing volume of healthcare data. Patient imaging, surgical records, and electronic health data all contribute to a rich pool of information that can train and refine these AI models5.

As CV becomes more integrated into clinical practice, critical hurdles related to data privacy, availability, and safety must be addressed so that the technology can be used effectively and without harm. With new draft NICE guidelines recommending the use of AI for fracture detection in urgent care7, orthopaedics stands on the brink of an AI transformation. The prospect of CV integrated into day-to-day orthopaedic workflows hints at a future where musculoskeletal care becomes more efficient and precise, helping clinicians make faster, data-driven decisions that ultimately improve patient outcomes6,8.

Why AI and computer vision?

In orthopaedic surgery, variability in how surgeons interpret fractures is a well-known issue. Human bias contributes to inconsistencies in fracture recognition and classification across trauma cases, affecting injuries of both the upper and lower limbs9,10. Recent advances in AI, particularly in CV, have the potential to improve both the speed and reliability of these assessments, providing tools that can standardise diagnostic accuracy and reduce subjective variability5.

CV’s underlying technology, complex neural networks, makes it particularly suited to medical image processing11,12. Unlike conventional machine learning (ML) models such as decision trees or support vector machines, CV relies on deep neural networks with convolutional layers, which allow the model to identify and respond to local spatial patterns in images (and temporal patterns in video)11,12. In Convolutional Neural Networks (CNNs), these layers perform filtering operations that recognise edges, shapes, and textures, making them well suited to analysing intricate features in radiographs and CT scans5,13. Because each filter’s weights are shared across the whole image, this architecture can process large volumes of image data with far fewer parameters than a fully connected network of comparable size. Rather than replacing human judgment, CV supports surgeons and radiologists by providing consistent, bias-reduced insights and streamlining clinical decision-making5,6,13.
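As a minimal sketch of the filtering operation described above, the following Python snippet slides a 3×3 Sobel kernel over a toy image. The function name, the toy image, and the choice of a hand-written Sobel kernel are illustrative assumptions; in a trained CNN the kernel weights are learned from data rather than fixed in advance.

```python
def convolve2d(image, kernel):
    """Slide a small kernel over a grey-scale image (no padding, stride 1).

    This is the cross-correlation form that deep learning libraries
    conventionally call "convolution".
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Sobel kernel that responds to left-to-right changes in intensity,
# i.e. vertical edges.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# Toy 5x6 "radiograph": dark on the left (0), bright on the right (9).
toy_image = [[0, 0, 0, 9, 9, 9] for _ in range(5)]

edge_map = convolve2d(toy_image, SOBEL_X)
for row in edge_map:
    print(row)
```

The output is large only where the window straddles the dark-to-bright boundary and zero in the uniform regions, which is the sense in which a convolutional filter "recognises" an edge. The same nine weights are reused at every position, which is why convolutional layers need comparatively few parameters.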

Current applications of computer vision

In orthopaedics, CV models have shown promise for fracture detection and classification on radiographs and CT scans, offering enhanced accuracy and efficiency1,2. Other medical fields have seen similar benefits; for instance, clinicians are employing CV for mammogram analysis in early breast cancer detection, fundoscopy for diagnosing papilledema, and CT imaging to identify intracerebral haemorrhages13. These applications streamline diagnostic workflows, allowing for timely and more accurate interventions.

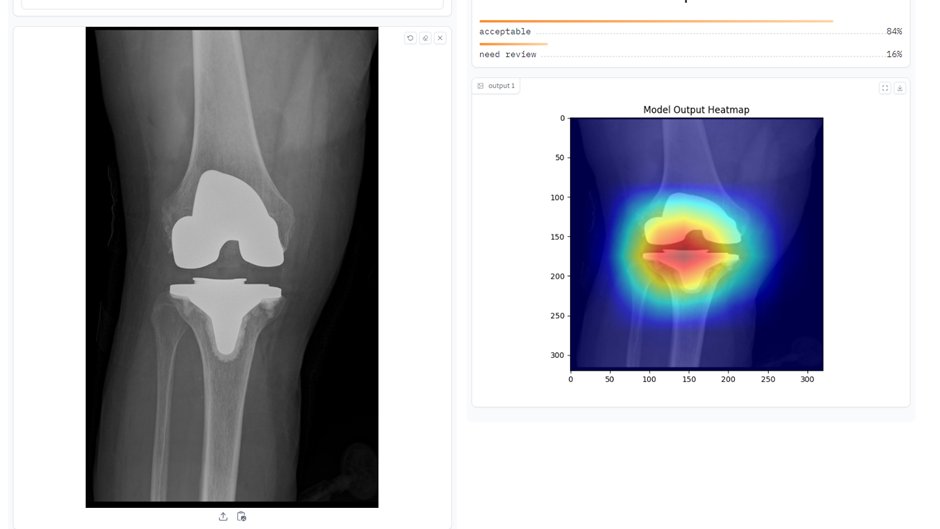

At the South West London Elective Orthopaedic Centre (SWLEOC), an ML model has been designed to screen postoperative arthroplasty X-rays. The model generates heat maps overlaid on the images, visually highlighting the areas it prioritises during analysis. This technique, called saliency mapping, not only aids in identifying potential postoperative issues but also gives clinicians a clearer understanding of the model’s interpretive process, supporting informed decision-making.
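Saliency maps can be produced in several ways, and the text does not specify which method the SWLEOC model uses. Purely as an illustration, the sketch below uses occlusion sensitivity, one simple approach: each patch of the image is masked in turn, and the drop in the model's score is recorded as that patch's importance. The scoring function `toy_model_score` is a hypothetical stand-in for a trained model, and the patch size and baseline value are assumptions.

```python
def toy_model_score(image):
    """Hypothetical stand-in for a trained model.

    It simply sums pixel intensities in the central region, so the
    "model" only pays attention to the middle of the image.
    """
    h, w = len(image), len(image[0])
    score = 0.0
    for r in range(h // 4, 3 * h // 4):
        for c in range(w // 4, 3 * w // 4):
            score += image[r][c]
    return score


def occlusion_saliency(image, score_fn, patch=2, baseline=0):
    """Return a heat map: how much the score drops when each patch is hidden."""
    h, w = len(image), len(image[0])
    reference = score_fn(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            occluded = [row[:] for row in image]  # copy so the input is untouched
            rows = range(top, min(top + patch, h))
            cols = range(left, min(left + patch, w))
            for r in rows:
                for c in cols:
                    occluded[r][c] = baseline
            drop = reference - score_fn(occluded)
            for r in rows:
                for c in cols:
                    heat[r][c] = drop
    return heat


image = [[1] * 8 for _ in range(8)]  # uniform 8x8 toy image
heat = occlusion_saliency(image, toy_model_score)
for row in heat:
    print(row)
```

In this toy case the heat map is non-zero only over the central patches, mirroring the region the stand-in model relies on. Overlaying such a map on the original image is what yields the kind of visual highlight described above.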

A computer vision tool to streamline postoperative wound assessment in total knee arthroplasty (TKA) patients is also being developed. The initiative aims to develop and validate a deep learning algorithm that categorises wounds. Using labelled wound images and expert input, the algorithm is intended to provide rapid, accurate assessment that aligns with clinical standards. The project’s ultimate goal is to give patients an accessible tool that allows early intervention for wound complications while reducing the follow-up burden on healthcare professionals.

Figure 1: CV model for evaluating post-arthroplasty images.

How does computer vision work?

Imagine teaching a child to identify a banana. By showing them bananas of different shapes, sizes, and colours, they eventually recognise a banana by focusing on essential features like its shape, curvature, and colour. Similarly, CV operates by ‘learning’ from numerous labelled images. A CV model is trained on extensive datasets containing annotated images (e.g., ‘fracture’ or ‘no fracture’), learning to identify the unique characteristics that define each category. This training allows CV systems to process visual information and ‘see’ in a way that can augment clinical diagnosis1,13.

CV algorithms perform several key technical tasks:

1. Classification: The CV model sorts images into predefined classes, such as identifying an image as depicting a ‘fractured’ or ‘non-fractured’ bone. This process involves the model learning to detect patterns that distinguish between these classes, often relying on thousands to millions of labelled examples.

2. Object Detection: Beyond merely categorising an image, object detection identifies and locates specific objects or areas of interest within the image. For instance, the model may pinpoint the exact location of a fracture line within an X-ray.

3. Segmentation: Segmentation divides an image into multiple distinct segments or regions, enabling the model to isolate specific parts, such as bones or joint spaces in an orthopaedic X-ray.

4. Feature Extraction: This involves identifying key characteristics, such as edges, textures, or specific shapes, within an image that are relevant to the diagnosis. This extraction process allows the model to develop a nuanced understanding of the image, supporting clinical tasks like fracture detection or implant alignment.

Following training, the model undergoes a testing phase where its accuracy and generalisability are evaluated using a separate dataset. This testing helps ensure that the model performs reliably in real-world clinical applications13,14. For example, models developed to identify fractures in training images must demonstrate the same accuracy when applied to new images from different institutions or patient populations. This need for rigorous external validation and continuous refinement highlights the complexity of implementing CV in clinical settings.
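To make the testing step more concrete, the sketch below shows two measures commonly used when evaluating such models on a held-out dataset: sensitivity and specificity for a classifier, and intersection over union (IoU) for a detected bounding box. The labels, predictions, and box coordinates are made-up illustrative values, not results from any study, and the function names are assumptions.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives (1 = fracture, 0 = no fracture)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def sensitivity_specificity(y_true, y_pred):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


def box_iou(box_a, box_b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


# Illustrative held-out test set: reference labels vs. model predictions.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")

# Illustrative annotated fracture region vs. the region the model proposed.
iou = box_iou((10, 10, 50, 50), (30, 30, 70, 70))
print(f"IoU={iou:.3f}")
```

Running the same calculation on images from a different institution, and comparing the figures with those from the internal test set, is one simple way to quantify how well a model generalises.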

As CV continues to evolve, so too does its potential to support orthopaedic care, particularly in trauma settings. CV’s ability to consistently and accurately interpret visual data could transform diagnosis, procedural planning, and post-operative monitoring. However, as promising as these tools are, additional clinical trials, regulatory oversight, and improvements in data quality and reporting standards will be necessary to ensure CV models perform reliably and safely across diverse clinical environments.

Future perspectives in orthopaedic computer vision

As CV continues to integrate into orthopaedic practice, its future holds substantial promise but also faces critical challenges. While tools like BoneView™ and VERASENSE™ illustrate CV’s potential for fracture detection and surgical alignment13, broader applications require scalable data solutions, advanced multi-tasking capabilities, and regulatory advancements to maximise CV’s clinical impact.

One of the foremost challenges to CV adoption in orthopaedics is the availability and diversity of large-scale annotated datasets. Manual video labelling, especially for complex procedures like arthroplasty or fracture reduction, demands significant time and resources, limiting scalability14,15. Interactive and semi-automatic labelling tools are emerging as viable solutions, enabling trained novices to label surgical videos with accuracy comparable to specialists13–15. Applying these methods in orthopaedics could accelerate data generation, supporting more robust model training without overwhelming clinical teams.

Despite these advancements, most CV implementations in orthopaedics remain at the feasibility or proof-of-concept stage. Key hurdles include strict data privacy and security requirements, ethical considerations, and the inherent complexities of integrating CV into existing healthcare IT systems. Effective CV models require diverse datasets to ensure accurate, generalisable algorithms, yet most training data is sourced from single institutions. This reliance on single-site data limits the broader applicability of models and raises challenges for external validation, especially given the logistical and privacy constraints associated with sharing sensitive patient data like X-rays across institutions.

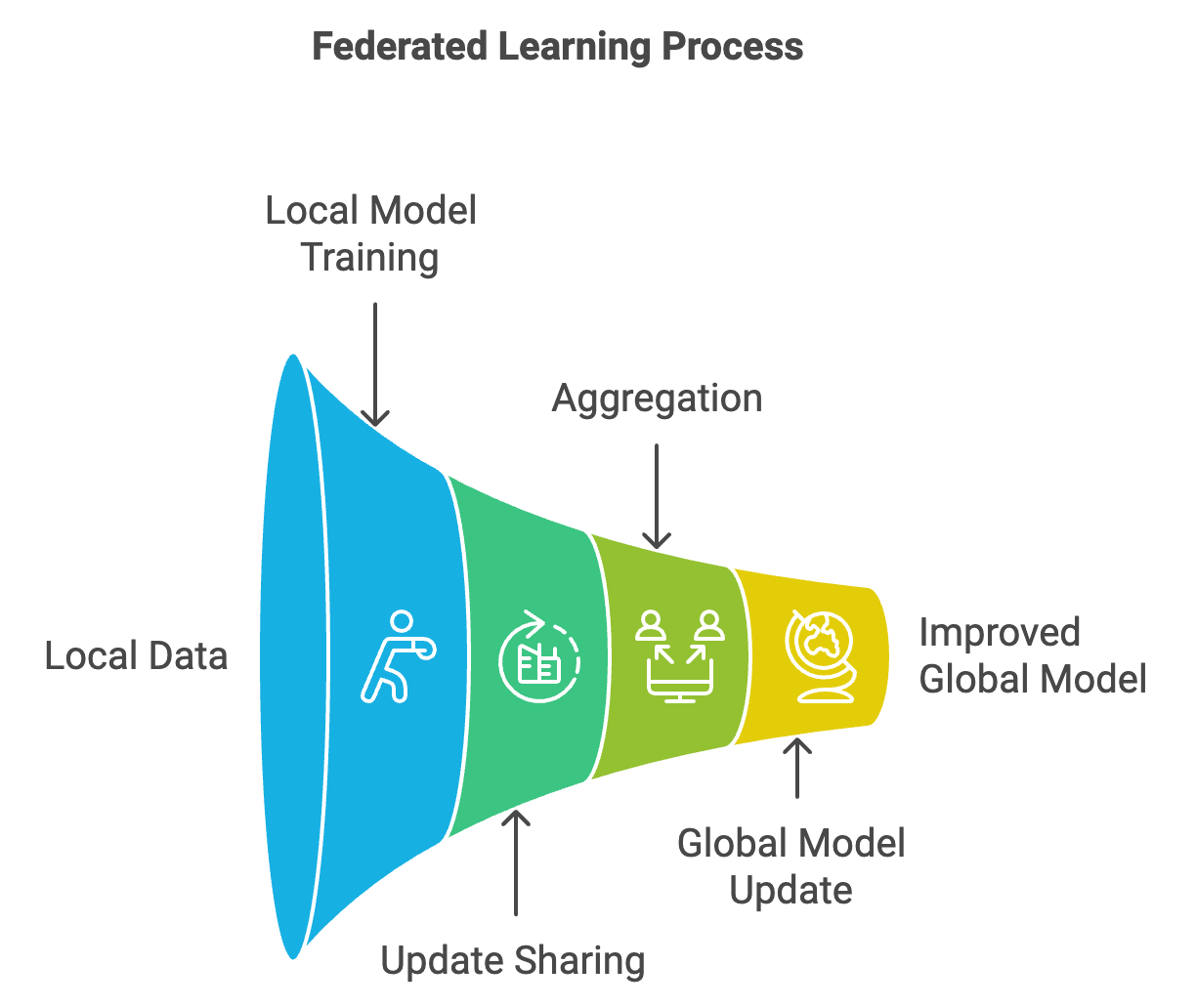

Federated learning offers a potential solution to these data limitations. By allowing institutions to collaboratively train a shared model without exchanging raw data, federated learning enables hospitals to maintain patient privacy while the shared model still benefits from a collectively diverse pool of data16,17. For example, hospitals across regions can each train a CV model on local orthopaedic X-ray images to identify fractures or assess joint degeneration. Rather than transferring sensitive images, each institution sends only model updates (patterns learned from local data) back to a central server, which combines them, thereby enhancing accuracy and generalisability without compromising patient confidentiality.

Figure 3: Federated model concept
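The aggregation step in the federated approach described above can be sketched with federated averaging, a common scheme in which the central server combines each site's model weights in proportion to the number of local training images. The text does not say which aggregation scheme any particular project uses, so this is an assumption, and the weight vectors and sample counts below are invented for illustration.

```python
def federated_average(site_updates):
    """Combine per-site model weights into one global model.

    site_updates: list of (weights, n_samples) tuples, one per hospital.
    Each site's weights count in proportion to its number of local images.
    Only these weights leave the hospital; the images themselves do not.
    """
    total_samples = sum(n for _, n in site_updates)
    n_params = len(site_updates[0][0])
    averaged = [0.0] * n_params
    for weights, n in site_updates:
        for i, w in enumerate(weights):
            averaged[i] += w * n / total_samples
    return averaged


# Hypothetical updates from two hospitals after one round of local training.
site_updates = [
    ([0.20, -0.10, 0.50], 100),  # hospital A: 100 local X-rays
    ([0.40, 0.10, 0.30], 300),   # hospital B: 300 local X-rays
]

global_weights = federated_average(site_updates)
print(global_weights)
```

In practice this exchange is repeated over many rounds: the server returns the averaged weights to every site, each site continues training on its own images, and the cycle repeats until the shared model stops improving.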

Future CV models will need multi-tasking capabilities to handle various orthopaedic conditions (fractures, joint dislocations, and soft tissue injuries) instead of focusing on isolated tasks. Although CNNs have shown strong performance in fracture detection, challenges remain in identifying subtle or occult fractures, such as scaphoid fractures, where CV models struggle to surpass the accuracy of experienced orthopaedic surgeons18. Prospective trials and multi-centre validation studies are essential to ensure that these models are reliable and generalisable across diverse patient populations and clinical environments.

As CV technology matures, its ability to assist orthopaedic surgeons in diagnostic accuracy and procedural planning will likely expand. By addressing the technical, ethical, and practical barriers of CV integration through innovative approaches like federated learning and improved multi-tasking architectures, CV has the potential to transition from an emerging technology to an integral component of orthopaedic workflows. Ultimately, CV in orthopaedics will complement human expertise, enhancing diagnostic consistency, minimising bias, and enabling surgeons to make data-driven decisions that improve patient outcomes.

References

- Lopez CD, Gazgalis A, Boddapati V, Shah RP, Cooper HJ, Geller JA. Artificial Learning and Machine Learning Decision Guidance Applications in Total Hip and Knee Arthroplasty: A Systematic Review. Arthroplast Today 2021;11:103-12.

- Yin J, Ngiam KY, Teo HH. Role of Artificial Intelligence Applications in Real-Life Clinical Practice: Systematic Review. J Med Internet Res 2021;23:e25759.

- Coppola A, Asopa V. A practical approach to artificial intelligence in trauma and orthopaedics. Journal of Trauma and Orthopaedics. 2024;12(2):30-2.

- Ozkaya E, Topal FE, Bulut T, Gursoy M, Ozuysal M, Karakaya Z. Evaluation of an artificial intelligence system for diagnosing scaphoid fracture on direct radiography. Eur J Trauma Emerg Surg 2022;48:585-92.

- Olveres J, González G, Torres F, Moreno-Tagle JC, Carbajal-Degante E, Valencia-Rodríguez A, et al. What is new in computer vision and artificial intelligence in medical image analysis applications. Quant Imaging Med Surg 2021;11:3830-53.

- Huffman N, Pasqualini I, Khan ST, Klika AK, Deren ME, Jin Y, et al. Enabling Personalized Medicine in Orthopaedic Surgery Through Artificial Intelligence: A Critical Analysis Review. JBJS Reviews 2024;12(3):e23.00232.

- Tiwari T, Tiwari T, Tiwari S. How Artificial Intelligence, Machine Learning and Deep Learning are Radically Different? International Journal of Advanced Research in Computer Science and Software Engineering 2018;8:1.

- Saggi SS, Kuah LZD, Toh LCA, Shah MTBM, Wong MK, Bin Abd Razak HR. Optimisation of postoperative X-ray acquisition for orthopaedic patients. BMJ Open Quality 2022;11:e001216.

- Thomas D. How AI and convolutional neural networks can revolutionize orthopaedic surgery. J Clin Orthop Trauma 2023;40:102165.

- Oosterhoff JHF, Doornberg JN. Artificial intelligence in orthopaedics: false hope or not? A narrative review along the line of Gartner’s hype cycle. EFORT Open Rev. 2020;5(10):593-603.

- Cheikh Youssef S, Hachach-Haram N, Aydin A, Shah TT, Sapre N, Nair R, et al. Video labelling robot-assisted radical prostatectomy and the role of artificial intelligence (AI): training a novice. J Robot Surg 2023;17(2):695-701.

- Udegbe FC, Ebulue OR, Ebulue CC, Ekesiobi CS. The Role of Artificial Intelligence in Healthcare: A Systematic Review of Applications and Challenges. International Medical Science Research Journal 2024;4:500-8.

- Lauter K. Private AI: Machine Learning on Encrypted Data. In: Chacón Rebollo T, Donat R, Higueras I, editors. Recent Advances in Industrial and Applied Mathematics. SEMA SIMAI Springer Series, vol 1. Cham: Springer; 2022.

- Olveres J, González G, Torres F, Moreno-Tagle JC, Carbajal-Degante E, Valencia-Rodríguez A, et al. What is new in computer vision and artificial intelligence in medical image analysis applications. Quant Imaging Med Surg. 2021;11(8):3830-53.

- National Institute for Health and Care Excellence. AI technologies recommended for use in detecting fractures. 2024. Available at: www.nice.org.uk/news/articles/ai-technologies-recommended-for-use-in-detecting-fractures.

- Truhn D, Tayebi Arasteh S, Saldanha OL, Müller-Franzes G, Khader F, Quirke P, et al. Encrypted federated learning for secure decentralized collaboration in cancer image analysis. Med Image Anal. 2024;92:103059.

- Moskal JT, Diduch DR. Postoperative radiographs after total knee arthroplasty: a cost-containment strategy. Am J Knee Surg 1998;11(2):89-93.

- Babu VD, Malathi K. Dynamic Deep Learning Algorithm (DDLA) for Processing of Complex and Large Datasets. In: 2022 Second International Conference on Artificial Intelligence and Smart Energy (ICAIS); Coimbatore, India; 2022. p. 336-42.